LeetCode vs. Reality: Why Coding Interviews Are Stuck in 2010

AI has rewritten how engineers work, but somehow, not how we get hired. Here’s why the old rituals persist, and how to prepare for the new ones.

I’ve been around long enough to see a few full cycles of how engineers get interviewed.

We had the in-person whiteboard rounds, where matching semicolon indentations with squeaky dry-erase markers felt like half the battle. Then came the “modern practical” phase where everybody got to interview on a loaner laptop running Eclipse. The first time this happened to me, I remember thinking, “Wait, I have to write real tests now!” Eventually everything moved online with HackerRank, CoderPad, and take-home assignments on GitHub.

But now we’re in the age of AI, where the IDE itself can vibe its way through an application, and somehow interviews haven’t changed at all.

We’re piloting drones through the Mars atmosphere, and being interviewed for parallel parking skills.

That’s what fascinates me: the work is clearly evolving, but the rituals around hiring haven’t.

Why LeetCode anyway?

LeetCode-style interviews are one of the most ubiquitous technical screens out there. Which, on its face, is kind of silly. I’ve been in this industry for fifteen years. You don’t think I can code? I once rewrote a legacy monolith myself. In the snow. Twice.

Still, let’s be fair for a second. Think about the constraints companies face when designing interviews. The problems they use have to:

Engage weaker candidates without losing them

Challenge stronger candidates enough to see them think

Fit everything into thirty minutes with time for questions afterwards

Require coding skills

Be judged consistently across teams and levels

If we’re honest, these interviews don’t exist because they’re realistic; they exist because they’re scalable. When you’re trying to evaluate thousands of engineers across dozens of teams, you need something consistent and gradable.

Sure, you can make a great chicken sandwich. But McDonald’s can make ten thousand identical ones in a second, split across six continents. That’s what hiring processes are emulating: repeatability over realism.

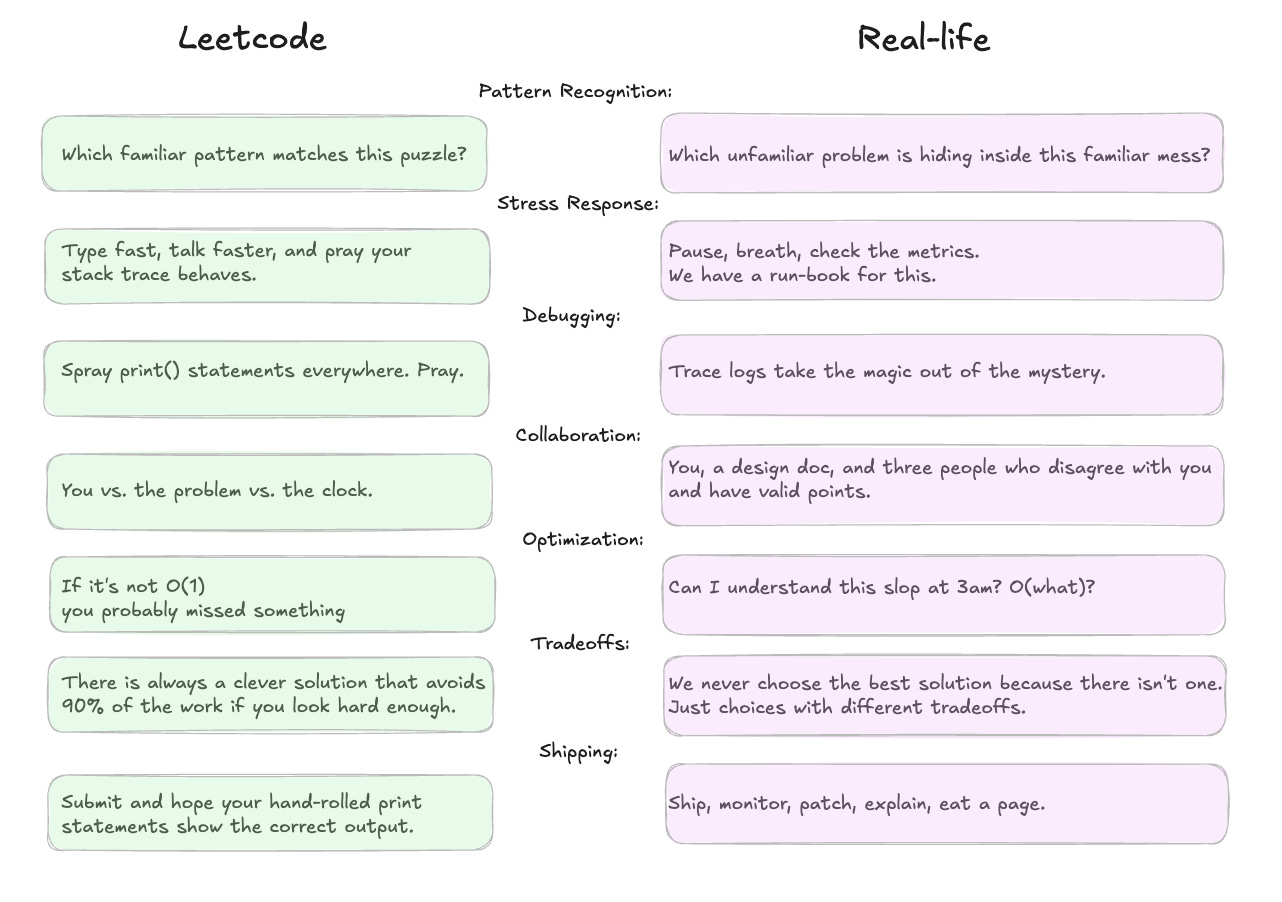

The problem is that what they measure no longer maps to what the job actually is.

They reward engineers who can stay calm under artificial pressure, recall syntax from memory, and pattern-match problems they’ve seen before. But the skills that matter in today’s workplace (reasoning about systems, communicating trade-offs, and guiding AI tools) don’t show up in a timed puzzle.

Software has already shifted away from raw code toward systems, trade-offs, and collaboration. Yet we’re still testing candidates as if success depends on how fast they can remember syntax.

The problem isn’t that LeetCode interviews are unfair. It’s that they’re measuring a version of engineering that doesn’t exist anymore.

Playing the old game with new tools

If the game’s outdated, fine. We can adapt. Here’s how I turned AI from a distraction into my best weapon.

Step 1: Have a specific plan

LeetCode, like most of software, is more about pattern recognition than anyone likes to admit.

The fastest way to get better is to start with a naive solution, figure out where it’s slow, and then match the right data structure or algorithmic trick to make it faster. Need to query a key quickly? Use a Map. Need to find unique values? That’s a Set.
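Here is a minimal sketch of that loop in Python, using the classic "two numbers that add up to a target" problem as the example. The function names are mine, picked for illustration. The naive version checks every pair; the faster one fixes the slow part (the repeated scan) with a Map, which in Python is a dict. The last function shows the Set case.

```python
def two_sum_naive(nums, target):
    """Naive start: check every pair of indices. O(n^2) time."""
    for i in range(len(nums)):
        for j in range(i + 1, len(nums)):
            if nums[i] + nums[j] == target:
                return (i, j)
    return None


def two_sum_fast(nums, target):
    """Same problem in one pass: a dict maps each value seen so far to its index."""
    seen = {}
    for j, value in enumerate(nums):
        # The slow inner loop becomes a single average O(1) lookup.
        i = seen.get(target - value)
        if i is not None:
            return (i, j)
        seen[value] = j
    return None


def has_duplicates(nums):
    """A set keeps only unique values, so a size mismatch means a repeat."""
    return len(set(nums)) != len(nums)
```

When more than one pair sums to the target, the two versions may return different (but both valid) pairs, since they search in a different order.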

And yes, the only way to get better at this is to practice. (What did you think I was going to say?)

Start with LeetCode’s Top 150 to get a good baseline. For big tech companies, the general rule is that you should be able to handle any Medium problem and some Hard problems. The smaller the company, the lower the bar usually is.

Step 2: Use AI to move faster

One thing I always forget between interview seasons is just how much repetitive setup these problems require.

I use my own IDE, so my workflow looks like this:

Create a new solution file

Set up a template class with the right args

Write a main method to execute

Add a few sample tests and just enough prints to verify they run

Only then do we reach the interesting part: actually solving the problem.

Those first four steps are a lot of clicking and typing for almost no learning value. Thinking up test cases is useful, but writing the for loops to run them isn’t. It’s busywork. It doesn’t teach you anything new.

So let the model handle it!

Paste the problem context, ask it to generate the file, and give it a short, consistent recipe for test cases. Why are we still typing boilerplate in 2025?
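For a sense of what the generated file might look like, here is a rough sketch in Python. The layout, the `CASES` list, and the `run_cases` helper are my own illustrative choices, not a standard format, and the method body is filled in only so the harness has something to run. In practice that body is the part you write yourself.

```python
class Solution:
    def is_anagram(self, s: str, t: str) -> bool:
        # Placeholder body for illustration; normally this starts out empty.
        return sorted(s) == sorted(t)


# The "recipe" for test cases: each entry is (arguments, expected result).
CASES = [
    (("anagram", "nagaram"), True),
    (("rat", "car"), False),
    (("", ""), True),
]


def run_cases(fn, cases):
    """Run every case, print PASS or FAIL for each, and return the failure count."""
    failures = 0
    for args, expected in cases:
        actual = fn(*args)
        ok = actual == expected
        if not ok:
            failures += 1
        status = "PASS" if ok else "FAIL"
        print(f"{status} args={args!r} expected={expected!r} actual={actual!r}")
    return failures


def main():
    failures = run_cases(Solution().is_anagram, CASES)
    print(f"{len(CASES) - failures}/{len(CASES)} cases passed")


if __name__ == "__main__":
    main()
```

Keeping the cases as plain data means adding a new edge case is one line, and the runner never has to change between problems.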

The skill you want to practice is how you think through a problem. The rest is a waste.

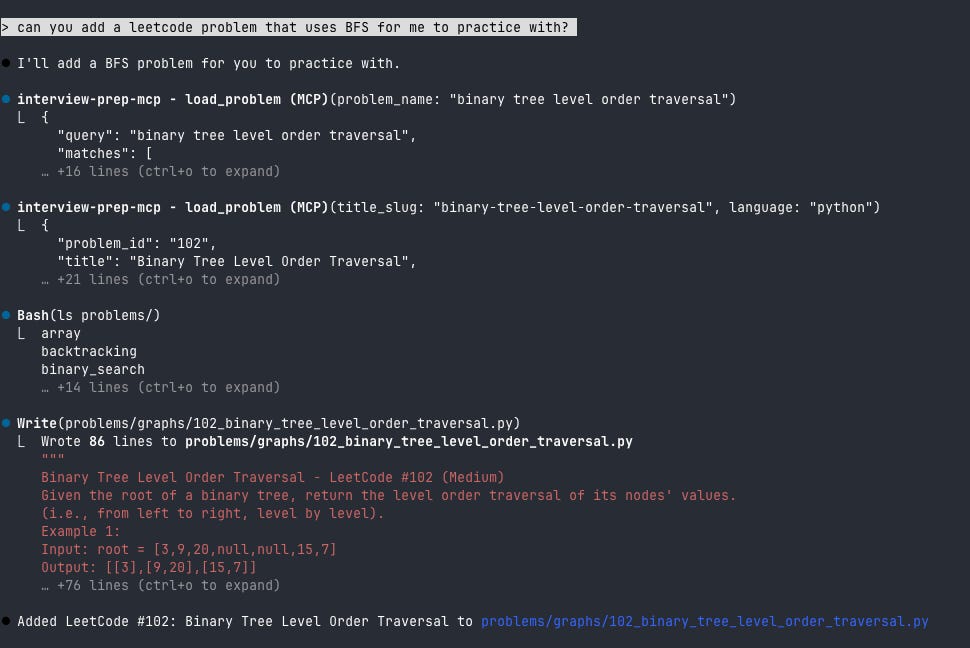

I even wrote a small MCP server that hooks into LeetCode’s public GraphQL API to automate this. My Claude Code agent connects through that server, finds problems by number or title, and generates ready-to-run files in whatever language I’m practicing.

It’s simple, but it saves a surprising amount of time and keeps me focused on the thinking, not the typing.

Step 3: AI as tutor, not answer key

When I get stuck, it’s tempting to peek at the solution for that quick flash of insight.

But that’s the fastest way to short-circuit learning. Resist the spoiler.

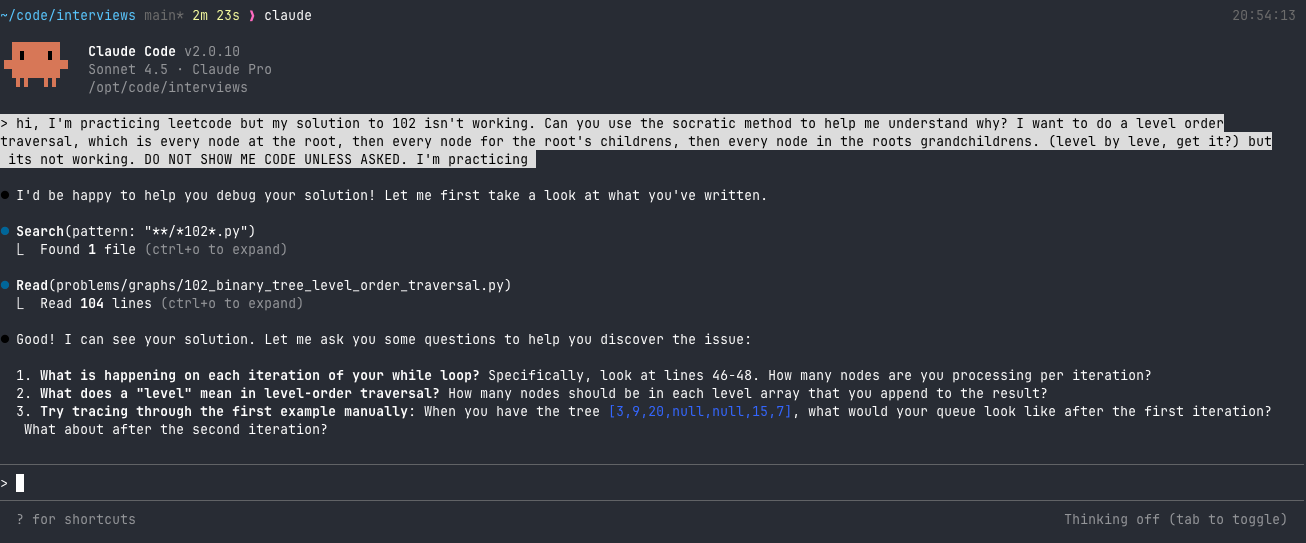

Instead, treat the model like a tutor. I explain in plain English how I’m approaching the problem, where I think it’s breaking down, and then ask it to nudge me using the Socratic method.

Pro-tip: always add the line “no code samples unless asked,” or the model will be extra helpful and finish the problem for you.
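If you find yourself retyping that setup every session, a tiny helper can assemble the prompt for you. This is only a sketch: the function name and the exact wording of the prompt are my own assumptions, and the resulting string can be pasted into whichever model you use.

```python
def build_tutor_prompt(problem: str, approach: str, stuck_on: str) -> str:
    """Assemble a Socratic-tutor prompt from a plain-English description."""
    sections = [
        "Act as a tutor and guide me with the Socratic method.",
        "Ask me one question at a time about my assumptions.",
        "No code samples unless asked.",
        "",
        f"Problem: {problem.strip()}",
        f"My approach so far: {approach.strip()}",
        f"Where I think it breaks down: {stuck_on.strip()}",
    ]
    return "\n".join(sections)


if __name__ == "__main__":
    print(
        build_tutor_prompt(
            problem="Find two numbers in a list that add up to a target.",
            approach="Compare every pair with two nested loops.",
            stuck_on="It is too slow on large inputs.",
        )
    )
```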

This is the number one technique that has improved my ability to do these problems. It’s easy to lull yourself into thinking you understand the solution when you’ve already seen the working code.

It’s much harder to fool yourself when the model is asking you specific questions about your assumptions.

Guided discovery sticks better than reading a finished solution. Senior devs can prompt for “vague hints”; juniors might need more structure. Either way, store your preferences so the model remembers your learning style across sessions.

Don’t sweat the syntax

AI is changing the abstractions we work in as engineers.

Yesterday, we focused on for-loops and OOP singleton patterns.

Tomorrow, it’ll be natural language and automated test results.

If I could tell my younger self one thing during those nerve-wracking whiteboard interviews, it’d be this: stop sweating the syntax.

We’ve entered a world where fluency means understanding abstractions, debugging with the right tools, and guiding models to produce correct code for our exact use case.

Yet LeetCode interviews still reward the opposite: syntax recall across multiple languages and the ability to type fast under pressure.

I don’t expect interviews to change overnight.

But by adapting how we prepare, we can use the same tools transforming engineering itself to challenge how we prove we’re “qualified.”

Someday, interviews might finally mirror how we actually work: messy, collaborative, context-driven, and yes, assisted by AI.

Until then, we can at least prepare like it’s 2025, not 2010.

Next post, I’ll dive into why System Design interviews are quickly becoming the most relevant part of the process — and why AI makes them more fun (and useful) than ever.

Boxes and arrows will probably outlive us all. But the coding rounds? They’re starting to feel like a relic.