What size LLM can I run locally?

And why do they each seem to be good at answering specific types of questions?

Lately there have been tons of AI tools popping up that run right on your computer but tap into the big players like OpenAI, Anthropic, and Google for the heavy lifting. Sure, these API services are powerful, but there are only so many $20 subscription I’m interested in.

That's where apps like Ollama and LM Studio come in handy. They let you download language models straight from HuggingFace and run them locally. But here's the thing—with so many different models out there, how do you figure out what size model you should actually be running on your machine? You've got to balance how fast they generate responses against what your hardware can handle, and that's going to be different for everyone.

By the time you finish reading this post, you'll have a clearer picture of how different models perform across various tasks, so you can make a smart choice for your own setup. Let's dive in!

Methodology and Setup

To gather the performance metrics we need, we’ll run a pre-defined set of prompts against a series of local models. The prompts will increase in complexity, and the models will also increase in size. By graphing the response times we’ll be able to visualize the tradeoff between the two.

What models will we test with?

Language models use a parameter count as a way of measuring their complexity. The easy way to think about it is:

Less parameters = knows less stuff = will run faster

More parameters = knows more stuff = will run slower

Here’s a few different models that we’re going to use for testing, along with their sizes.

Now, the 32B param model is right at the limit of what my M1 Pro macbook can support. I don’t have a dedicated GPU which is what provides the real horsepower for these type of performance evaluations. For this analysis thats fine, because what I’m interested in is what works for my setup, not what runs the absolute fastest possible. (Github so you can run this on your own setup).

For context, the latest models from the large AI labs are larger than 400B params and require entire datacenters to run.

What prompts will we test with?

I chose five different prompts which focus on different tasks I use day to day.

Factual recall: "What year did the Industrial Revolution begin?"

Conceptual explanation: "Explain why the sky appears blue in simple terms."

Analytical reasoning: "Solve for x: 3x + 7 = 22, and explain each step.”

Creative writing: "Write a 250 word cyberpunk story about an AI detective investigating a missing data case."

Planning and problem solving: "Design a startup business plan for an AI-powered customer service automation company, including target market, revenue model, and growth strategy."

What are we measuring?

I used Ollama, a popular LLM hosting application, to serve the models and provide a consistent suite of timing metrics. The specific metrics used were

eval_duration: How long it took the model to generate the response

eval_count: How large the response generated was

load_duration: How long it took to load the model into memory

Loading the model takes a long time for the initial run, so this lets us remove the outliers.

Results

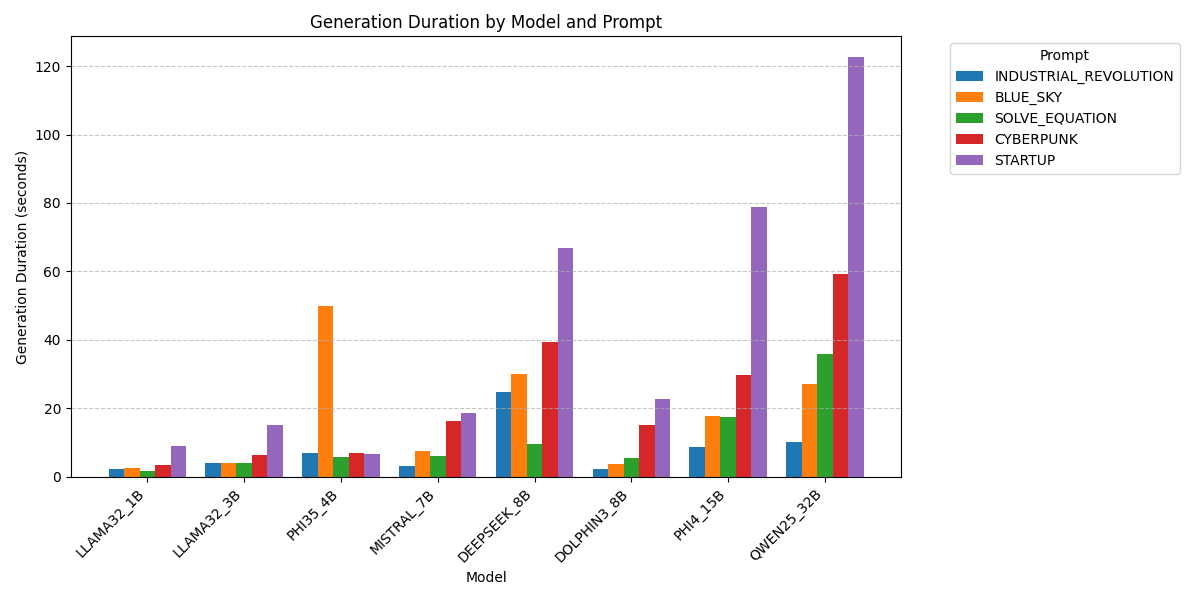

First lets look at how long each model took to respond to these prompts on average.

Our basic assumption is that since the larger models know more stuff, they’ll take longer to respond, which is clearly shown in the data here. The smallest model, LLAMA3.2 with 1B parameters, consistently generated responses in under ~3 seconds, while the largest model, Qwen2.5 with 32B parameters, took more than 2 minutes to answer similar questions.

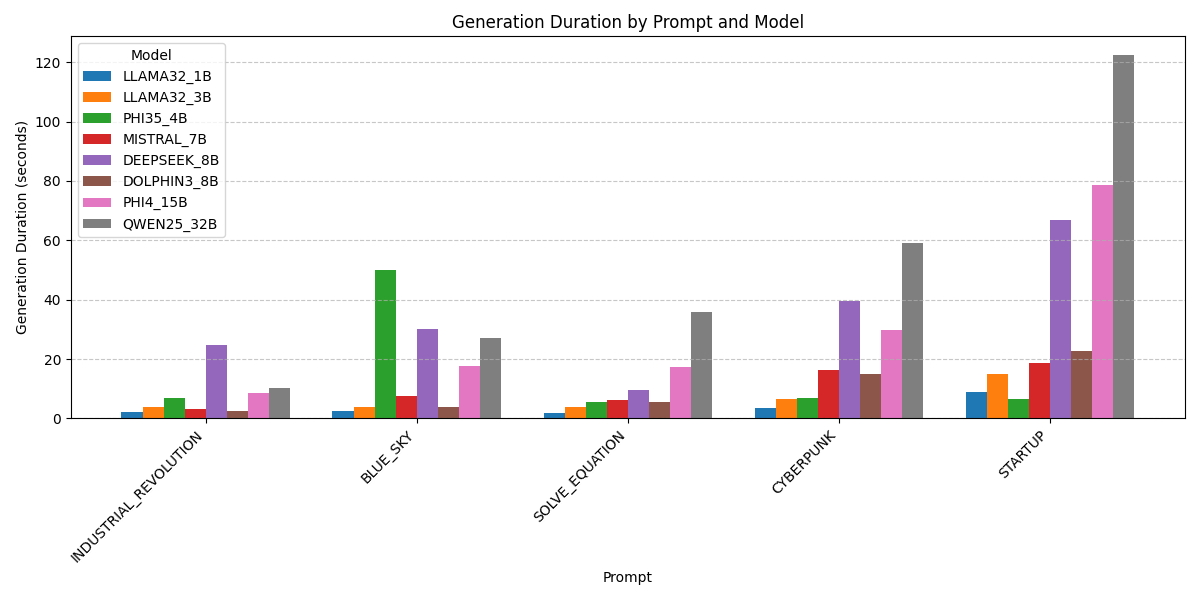

There are two specific parts to that graph that seem out of line with the others. Let’s re-arrange the data a little bit to see if it’s easier to see. Here’s the response times for each model grouped by related prompts.

Analysis

Phi3.5 and what it means to be blue

The BLUE_SKY prompt from the graph shows an outlier in the response times for the Phi3.5 model with 4B params. None of the other prompts for this model seem out of line with expectations. Can this model not explain simple science?

Actually, in this case the model was a little too good at explaining the science. A few of the responses contained lines and lines of generated text of the model twisting itself into knots to explain in simpler and simpler terms how light refracts in the atmosphere. These lengthy responses threw off the timing metrics.

In practice I could fix this by being explicit in my prompt about how much to explain.

DeepSeek and Dolphin

DeepSeek is an extremely popular model that was recently released by a Chinese company, and is known for being smart and capable. Compare DeepSeek (purple) which has 8B params to another model Dolphin3 (brown) that also has 8B params. If they’re the same size shouldn’t they run the same speed? Why does DeepSeek seem so much slower for every prompt?

That’s because DeepSeek been optimized for a different type of problem. Instead of trying to remember everything it knows about the prompt, DeepSeek tries to think logically about the question. Look how fast it responded to the SOLVE_EQUATION prompt! Every other prompt took longer than 20 seconds, but this one was only ~5 on average. Why is that?

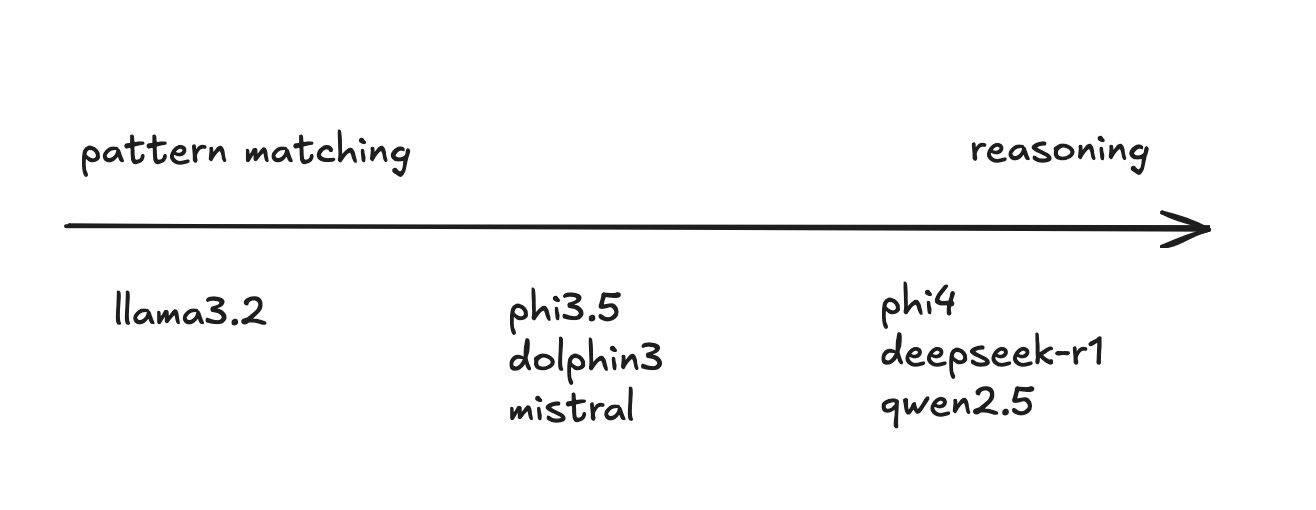

Pattern Matching vs. Reasoning Models

One of the most interesting aspects of this analysis relates to the architectural differences between models. Generally, models fall on a spectrum from pure pattern matching to more sophisticated reasoning. Here’s where each of the models I tested lies on this spectrum.

As models grow larger, pattern matching models start to lose their competitiveness when compared against reasoning models for the same task.

Imagine you're up on stage for Jeopardy and Alex Trebek asks you a question. Pattern matching models are great at the lightning rounds. They answer quickly, without spending time on deeper thought. Reasoning models are designed for the Final Jeopardy questions, where contestants are given extra time to think. This helps them answer more complicated questions with a deeper analysis.

Here’s a typical response for a prompt to DeepSeek. Notice that it shows you the thought process to arrive at the solution, and how clear and concise the solution is compared to the typical ChatGPT response.

<think>

To solve the equation (3x + 7 = 22), I start by isolating the term with (x). This is achieved by subtracting 7 from both sides of the equation.

Next, to further isolate (x), I divide every term in the resulting equation by 3. This simplifies the equation to (x = frac{22 - 7}{3}).

Finally, performing the division gives me the value of (x) as (frac{15}{3}), which simplifies to 5.

</think>

To solve the equation (3x + 7 = 22), follow these steps:

1. Subtract 7 from both sides to isolate the term with ( x ):

[3x + 7 - 7 = 22 - 7]

Simplifying:

[3x = 15]

2. Divide both sides by 3 to solve for (x):

[frac{3x}{3} = frac{15}{3}]

Simplifying:

[x = 5]

**Final Answer:** [5]Practical Recommendations

Based on the performance analysis, and some anecdotal testing over the past few days, here's how I plan to use these models locally.

The right size for my macbook seems to be about 8B parameters. There are a ton of models at this size that offer different tradeoffs in terms of complexity and runtime.

For general use, I run the Dolphin3 model locally. Primarily this is used as a brainstorming or thinking partner. This has completely replaced Rubber Duck Debugging for me in an unbelievable way.

For coding and scripting tasks, I run a specialized Qwen2.5 model. This model is a little larger, and takes a little longer to think through my questions, but will generate a higher quality output. Mistakes in code can often be subtle, and the best way to fix them is to not write them in the first place.

What size model are you running locally? Have you found the sweet spot for your hardware and use cases? Let me know in the comments!

Appendix

For those interested in the technical details:

- The complete code used for this analysis is available in this GitHub repository

- For more information on optimizing Ollama for your specific hardware, check out the official documentation.