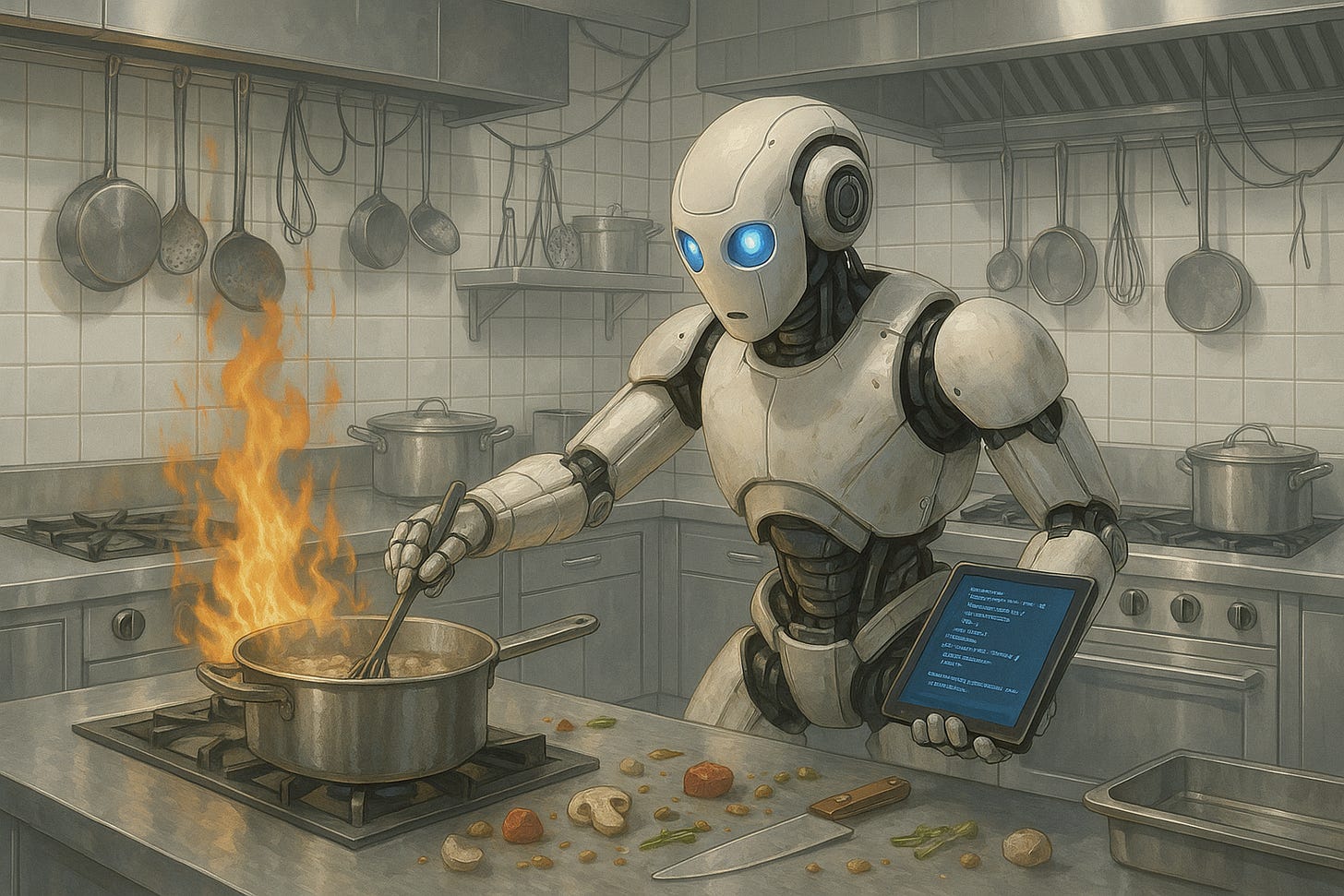

Structured Tools, Chaotic Kitchen

Giving Claude access to my system was easy. Making sure he didn’t fry the filesystem took more work.

Just a few years ago, writing code felt like being a line cook at an understaffed diner. You prepped every ingredient, grilled every burger, plated every dish, and hoped no one noticed when you burned the toast. Starting a new project in a different language? That was like swapping your spatula for a sushi knife. Same idea, entirely different muscle memory.

These days, I’m not the cook. I’m the chef. Maybe even the restaurant owner. The models are doing the chopping and stirring. I’m deciding what’s on the menu, how it should be plated, and what kind of decor the restaurant should have.

In an earlier post, I compared tool access to giving models a menu. That still holds true. But even the best menu doesn’t help if the chef doesn’t understand what kind of restaurant they’re running. What I’m realizing now is that clarity of purpose matters just as much as capability. Tools are only powerful when they are helping you progress in the right direction.

Now I’m not working the grill. I’m running the kitchen, thinking about what kind of experience I want to deliver while the models handle the food prep.

This post is about what it takes to run that kitchen well. How I’ve started to design systems, workflows, and expectations that make working with these models feel more like collaboration and less like cleanup.

He Can Chop, But Can He Plate?

These tools are incredible at wiring things up: infra, tests, helper functions, even error-handling boilerplate. With Windsurf, I went from “here’s a basic Python MCP server” to “this is production-ready” in about an hour. Code generation models are great at the how. They struggle with the what.

Fundamentally, good software comes from a consistent series of decisions that all point toward a goal. Some of those decisions happen deep in the code, like whether a method should require an authenticated user. But they ripple up into bigger product questions. Should free users have access to this feature at all? Should a server error kill the entire request, or log and continue?

These aren’t technical choices. They’re product choices. And the models don’t have the context to make them well. Developers who click “accept all” too quickly end up with a system full of quiet contradictions and accidental edge cases.
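One way to keep a decision like "kill the request or log and continue" from being made silently is to force it into the function signature. This is only a sketch. The names `OnError` and `process_items` are hypothetical and not from my MCP project. The point is that the caller has to state the policy, so the model can't quietly pick one.

```python
import logging
from enum import Enum
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")

logger = logging.getLogger(__name__)


class OnError(Enum):
    """Hypothetical policy: what a handler failure means for the whole request."""

    FAIL_REQUEST = "fail_request"
    LOG_AND_CONTINUE = "log_and_continue"


def process_items(
    items: Iterable[T],
    handler: Callable[[T], R],
    on_error: OnError,  # no default on purpose: the caller must decide
) -> List[R]:
    """Apply handler to each item, following the caller's error policy."""
    results: List[R] = []
    for item in items:
        try:
            results.append(handler(item))
        except Exception:
            if on_error is OnError.FAIL_REQUEST:
                # Product decision: one bad item fails the entire request.
                raise
            # Product decision: record the failure and keep going.
            logger.exception("handler failed for %r; continuing", item)
    return results
```

Because `on_error` has no default, every call site shows which choice was made, which makes it much easier to spot in review.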

Even something as basic as file access can go sideways. In my MCP project, the model set up a sandboxed directory structure, and it mostly worked, until I realized it was letting users create their own symbolic links. A user could drop a symlink in their allowed folder that pointed straight to private files, and the model would helpfully load them. Just like that, a supposedly restricted environment had access to system-level config files. The model did exactly what I asked, but it had no sense of the broader boundaries.
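Here is a minimal sketch of the kind of check that closes this hole, assuming the sandbox is a single root directory. The names `resolve_in_sandbox` and `SandboxViolation` are made up for illustration and are not the code from my project. The idea is to resolve symlinks first and only then compare the result against the root.

```python
from pathlib import Path


class SandboxViolation(Exception):
    """Raised when a requested path resolves outside the sandbox root."""


def resolve_in_sandbox(root: Path, requested: str) -> Path:
    """Return the real path for `requested`, or raise if it escapes `root`.

    Path.resolve() follows symlinks and collapses ".." segments, so the
    containment check runs against where the path actually points, not
    against the string the user typed.
    """
    real_root = root.resolve()
    # Joining with an absolute `requested` discards the root, which is fine:
    # the containment check below still rejects it.
    candidate = (real_root / requested).resolve()
    if candidate != real_root and real_root not in candidate.parents:
        raise SandboxViolation(f"{requested!r} resolves outside the sandbox")
    return candidate
```

A symlink inside the allowed folder that points at a private file now fails the check, as do `../` tricks and absolute paths. One caveat: the check and the later file open are separate steps, so a link swapped in between them could still slip through. Treat this as a first line of defense, not a complete one.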

One way to reduce this risk is by giving the model smaller, more focused tasks. But that only helps you catch the mistakes you’re already looking for.

The best developers don’t just write code. They shape the decisions behind it. That hasn’t changed, even when a model is doing the typing.

No, Claude, We Don’t Serve That Here

The real limit here isn’t just context size. It’s that the model doesn’t share the same kitchen rules. I have a mental model for how my service works, what patterns I expect, what tradeoffs I’ve already made, what assumptions are non-negotiable. But the model only sees a slice of the recipe at a time. It’s doing its best with what it’s given, but it doesn’t know why I made that design choice three features ago or what constraint it was meant to solve.

So I’ll fix a bug in one place, then run into a nearly identical bug somewhere else, only to realize the model forgot how we solved it the first time. Worse, it makes a whole new set of assumptions. Now I’ve got conflicting patterns, and clicking “accept all” doesn’t give me enough context to catch them.

Windsurf and Cursor both let you tag specific files and say, “Write tests for @main.py using the async style from @test_service.py.” That nudges the model in the right direction.

What I really want is something deeper. Not just formatting, but institutional knowledge. How should tests behave? What counts as a proper permission check? What makes a comment useful or extraneous? I want the model to carry that worldview forward and treat it as canon, not suggestions.

Building the Kitchen Around the Tools

Whether it’s debugging or tequila, the truth remains the same:

One shot is never enough.

Working with these models has made that even clearer. The more time I spend pairing with them instead of coding solo, the more I realize I need a real workflow, something that lets me move quickly without losing track of the details.

The MCP filesystem example from the previous post gave me a clean foundation to explore how these models perform across a real system. In that post, I talked about giving the models a structured menu of tools. This workflow is the next step. It turns out the tools alone aren’t enough without a process for turning decisions into consistent results. What I needed was a system that doesn’t just expose capabilities but also shapes how those capabilities are used across the entire project.

Here’s the four-step framework I’ve landed on so far. I’m still adjusting things as I go, learning what works, and changing how I work with each model as they evolve and add capabilities.

From Prompt to Plate

Shape the product

For the first pass I use ChatGPT o3 and Gemini 2.5 Pro. They’re both strong at exploring product goals, clarifying vague ideas, and identifying edge cases early. I use a prompt template to frame the problem and see what assumptions fall out. When one model raises a question, I’ll ask the other what it thinks. It’s like pair programming with two interns who love to argue.

Break it into features

Once I understand the problem space, I work with the same models to turn my big idea into discrete, shippable units. Think of this like breaking Jira epics into manageable chunks of work. Pro tip: explicitly ask the models which assumptions or dependencies they are quietly building into these tasks.

Build each feature and its tests

Claude 3.7 or SWE-1 (via Windsurf) handles the first pass directly in the IDE. Once the feature is done, I’ll have it also generate the tests for what it just built. That’s an easy way to surface any quiet assumptions it made along the way.

Pressure test the results

After the code is working, I’ll bring in a second model, usually Claude or Gemini, to review or refactor any quirky bits. Each model has its own opinion about what “good” looks like, and they don’t always agree. When one complains about the other’s code, that’s usually where something interesting is hiding.

Call It a Soft Opening

Every time I write about AI dev tools, I end up circling the same three ideas:

Don’t trust them

But wow, look what they can do

Seriously, don’t trust them

All of that is still true. But here’s what’s also true: I haven’t felt this creatively energized as a developer in years.

In my first post, I wrote about giving models access to real tools. What happens when you hand them a proper menu? In the second, I showed how I wired up Claude to my filesystem to start testing that idea in the wild. This post is about what’s happened since then, and how I’ve started building systems around the tools instead of just tossing prompts into them.

They don’t replace judgment. They don’t remember why a decision made sense three features ago. But they remove just enough friction to help you stop thinking like a technician and start thinking like a builder.

This isn’t a polished process. I’m still figuring it out, one messy commit at a time. But each project makes the next one easier. And with every mistake, I get a little closer to the kind of system I want to run, and the kind of chef I want to become.