I Just Wanted a Menu

Why MCP servers are the missing link between language models and real tools

A few years ago, my wife and I stumbled into a little seafood place in Split, Croatia. One of those tiny spots tucked down a side street with outdoor tables, starched red-and-white tablecloths, and no tourists in sight. The empty dining room led into an open kitchen, where an older man leaned patiently against an aging stove. His face lit up when we walked in. He rushed around to greet us with glasses of sparkling water.

“What kind of fish would you like today?” he asked.

We’d spent the morning biking through Split and the afternoon on a cocktail tour. Now, staring up at this kind-eyed gentleman, I couldn’t remember what fish were in season. Or, frankly, what fish even existed.

“Uhh… a steak? With frites? Medium rare.”

The waiter’s eyebrows furrowed behind thick glasses. He scribbled something down and walked away, muttering under his breath.

In that moment I would have given anything for a menu. A list of choices I could point to—something structured enough to help me decide, but flexible enough to feel personal.

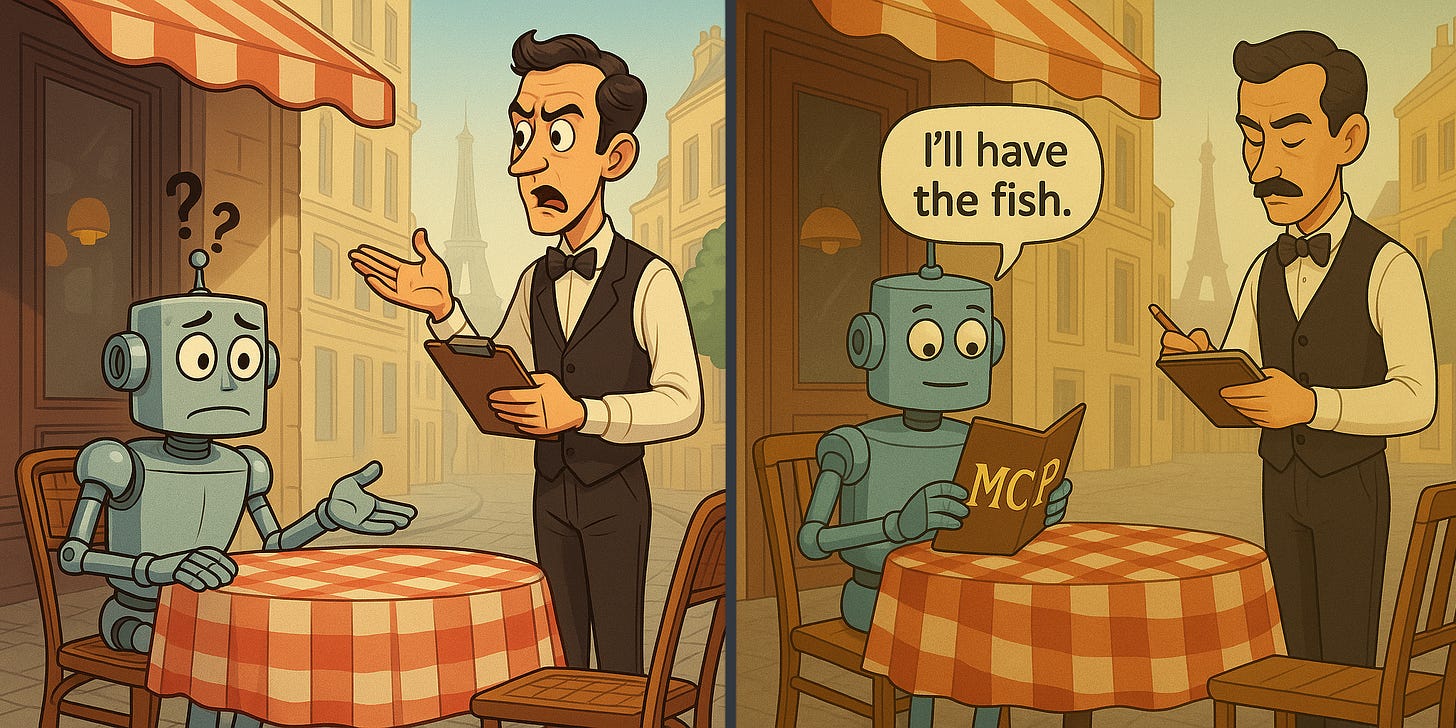

That moment of confusion is exactly how working with LLMs felt before MCP: full of potential, but frustratingly opaque.

What is an MCP server?

Model Context Protocol (MCP) servers give language models a structured way to interact with tools. Instead of guessing how to do something like a web search or file lookup, the model is presented with a menu of available operations and what information is required for each.

MCP servers help turn the confusion on the left into the conversation on the right.
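Under the hood, MCP messages are JSON-RPC 2.0. A client asks a server for its menu with a `tools/list` request, then orders from it with `tools/call`. Here’s a minimal Python sketch that only builds those two request bodies. The `web_search` tool and its `query` argument are hypothetical, and a real client would also need the protocol’s initialization handshake and a transport (such as stdio) to actually send them.

```python
import json


def list_tools_request(request_id: int) -> dict:
    """Ask the server for its menu: every tool it offers, with input schemas."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}


def call_tool_request(request_id: int, name: str, arguments: dict) -> dict:
    """Order one item from the menu by name, passing its arguments."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


if __name__ == "__main__":
    # "web_search" and "query" are hypothetical; a real server advertises its own tools.
    print(json.dumps(list_tools_request(1)))
    print(json.dumps(call_tool_request(2, "web_search", {"query": "fish in season in Split"})))
```

The point is the shape: the model never has to guess what exists, because the first request returns the menu and the second one orders from it by name.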

A Menu For Models

In the past, if I wanted an LLM to help a user order food, I would have written a prompt like this:

You are a helpful assistant that turns hunger pangs into food orders for our kitchen to prepare. When a user orders food, generate a JSON object with:

- fish_type: the type of fish to be cooked

- preparation: how it should be cooked (grilled, fried, raw)

- sauce: optional, any preferred sauces or sides

Now if the user says:

“I want something light, maybe grilled, not too oily. I love lemon.”

We expect the model to return JSON like this:

```json
{ "fish_type": "sea bass", "preparation": "grilled", "sauce": "lemon" }
```

This works, but it’s fragile and relies on the model to juggle all the implicit constraints. Are fried sardines a valid fish option? Do we have Worcestershire sauce in the kitchen? What if the user wants fish sticks?

With an MCP server, the restaurant could expose a manifest like this:

```json
{
  "name": "order_fish",
  "description": "Place a seafood order at a local restaurant",
  "inputSchema": {
    "type": "object",
    "properties": {
      "fish_type": {
        "type": "string",
        "enum": ["branzino", "sardine", "sea bass", "octopus"]
      },
      "preparation": {
        "type": "string",
        "enum": ["grilled", "fried", "raw", "baked"]
      },
      "sauce": {
        "type": "string"
      }
    },
    "required": ["fish_type", "preparation"]
  }
}
```

Now the model and the restaurant share a common menu to work from.
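Because the menu is plain JSON Schema, either side can check an order before the kitchen starts cooking. Here’s a minimal Python sketch that hand-checks the three rules this schema uses (`required`, `type: "string"`, and `enum`). It’s deliberately not a full JSON Schema validator; in practice you’d likely reach for a library such as `jsonschema`. The `check_order` function is just an illustrative name.

```python
# The JSON Schema from the order_fish manifest above.
ORDER_SCHEMA = {
    "type": "object",
    "properties": {
        "fish_type": {"type": "string", "enum": ["branzino", "sardine", "sea bass", "octopus"]},
        "preparation": {"type": "string", "enum": ["grilled", "fried", "raw", "baked"]},
        "sauce": {"type": "string"},
    },
    "required": ["fish_type", "preparation"],
}


def check_order(order: dict, schema: dict = ORDER_SCHEMA) -> list[str]:
    """Return a list of problems with an order; an empty list means it's valid.

    Handles only `required`, `type: "string"`, and `enum`, the three rules this
    schema uses. Extra fields are allowed, matching JSON Schema's default.
    """
    problems = []
    for field in schema.get("required", []):
        if field not in order:
            problems.append(f"missing required field: {field}")
    for field, value in order.items():
        rules = schema["properties"].get(field, {})
        if rules.get("type") == "string" and not isinstance(value, str):
            problems.append(f"{field} must be a string")
        elif "enum" in rules and value not in rules["enum"]:
            problems.append(f"{field} must be one of {rules['enum']}")
    return problems


if __name__ == "__main__":
    print(check_order({"fish_type": "sea bass", "preparation": "grilled", "sauce": "lemon"}))
    print(check_order({"fish_type": "steak", "preparation": "medium rare"}))
```

Run as a script, the sea bass order comes back with no problems, while my steak order from Split fails on both `fish_type` and `preparation`. That’s the menu doing its job.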

Why Developers Love MCP Servers

MCP servers remove friction for developers. They help LLM-powered software feel like an actual product, not just another hackathon project that will die in an abandoned GitHub repo.

Faster prototyping - register a tool once, and the model discovers the functionality automatically

Typed contracts - every tool declares a JSON Schema for its inputs, so calls are predictable and can be validated at runtime or in CI tooling

Swap-in tools - replace SQLite with Postgres or Snowflake without rewriting your entire prompting layer

Safer execution - add rate limiting, authentication, and validation around each tool call on the server side

Simpler docs - JSON Schemas are easy for LLMs to parse, and because they live next to the code they can be kept up to date automatically (unlike Confluence docs or README files)

Built-in automation - chain tool calls together with an agentic LLM. The model orchestrates your business logic, instead of fighting with API syntax.
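A few of these benefits are easiest to see in code. Below is a toy, in-process Python sketch (no MCP SDK, no transport) in which registering a function once is enough to put it on the menu, and every call passes through server-side checks (required arguments and a simple per-tool rate limit) before the tool runs. MCP tool definitions carry their schema under `inputSchema`, so the sketch does too; the `ToolServer` class, its `tool` decorator, and the `is_available` tool are all hypothetical names, and the official MCP SDKs provide their own, richer versions of this pattern.

```python
import time
from typing import Any, Callable


class ToolServer:
    """Toy in-process tool registry; not the MCP SDK, and no transport."""

    def __init__(self, max_calls_per_minute: int = 30):
        self.max_calls_per_minute = max_calls_per_minute
        self._tools: dict[str, dict[str, Any]] = {}
        self._call_times: dict[str, list[float]] = {}

    def tool(self, description: str, input_schema: dict) -> Callable:
        """Decorator: registering a function once puts it on the menu."""
        def register(fn: Callable) -> Callable:
            self._tools[fn.__name__] = {
                "definition": {
                    "name": fn.__name__,
                    "description": description,
                    "inputSchema": input_schema,
                },
                "fn": fn,
            }
            return fn
        return register

    def list_tools(self) -> list[dict]:
        """The menu: one definition per registered tool."""
        return [entry["definition"] for entry in self._tools.values()]

    def call_tool(self, name: str, arguments: dict) -> Any:
        """Check the request on the server side, then run the tool."""
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        entry = self._tools[name]

        # Validation: reject calls that are missing required arguments.
        required = entry["definition"]["inputSchema"].get("required", [])
        missing = [field for field in required if field not in arguments]
        if missing:
            raise ValueError(f"missing required arguments: {missing}")

        # Rate limiting: allow at most N calls per tool in any 60-second window.
        now = time.monotonic()
        recent = [t for t in self._call_times.get(name, []) if now - t < 60]
        if len(recent) >= self.max_calls_per_minute:
            raise RuntimeError(f"rate limit exceeded for tool: {name}")
        self._call_times[name] = recent + [now]

        return entry["fn"](**arguments)


server = ToolServer(max_calls_per_minute=2)


@server.tool(
    description="Check whether a fish is on today's menu",
    input_schema={
        "type": "object",
        "properties": {"fish_type": {"type": "string"}},
        "required": ["fish_type"],
    },
)
def is_available(fish_type: str) -> bool:
    # Hypothetical stand-in for a real inventory lookup.
    return fish_type in {"branzino", "sardine", "sea bass", "octopus"}
```

With the limit set to 2, `server.list_tools()` returns the single `is_available` definition, `server.call_tool("is_available", {"fish_type": "sardine"})` returns `True`, a call with `{}` raises `ValueError` before the tool ever runs, and a third valid call within the same minute raises `RuntimeError`. Swapping the inventory lookup for a real database would change only the body of `is_available`, not the menu the model sees.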

In short: less guesswork, more structure. Developers get the flexibility of language models with the predictability of real software engineering.

Why End Users Love MCP Servers (even if they don’t know what MCP is)

Easier access to integrations for developers leads directly to better products for consumers. Here are just a few real-world examples you can play with today:

Google Drive - The model can browse, search, and open files from a connected drive, so you can ask it to find your Q4 goals or summarize a meeting note.

Slack - Let the model summarize threads, schedule stand-ups, or draft posts in the right channels. It’s like giving your model a company badge.

Email - Triage inboxes, write responses, or flag urgent threads via standardized

GitHub - Open pull requests, summarize diffs, or review code comments. The model becomes part of the team, not just an advisor.

Spotify - Queue songs, build playlists, or adjust the vibe using actual API calls instead of simulated guesses.

One of my personal favorites goes beyond just tool access:

Memory - Build a persistent knowledge graph that grows as you work. It remembers details and decisions about your product without requiring constant manual context dumps.
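To make “knowledge graph” a little less abstract, here’s a toy Python sketch of the kind of structure such a memory might keep: named entities that accumulate observations, plus typed relations between them, saved to a JSON file so the next chat starts where the last one ended. The `MemoryGraph` class, its methods, and the file layout are all hypothetical; this illustrates the idea, not how any particular memory server is implemented.

```python
import json
from pathlib import Path


class MemoryGraph:
    """Toy persistent knowledge graph: entities with observations, plus relations."""

    def __init__(self, path: str):
        self.path = Path(path)
        if self.path.exists():
            data = json.loads(self.path.read_text())
        else:
            data = {"entities": {}, "relations": []}
        self.entities: dict[str, list[str]] = data["entities"]
        self.relations: list[dict[str, str]] = data["relations"]

    def observe(self, entity: str, fact: str) -> None:
        """Attach a fact to an entity, creating the entity if needed."""
        facts = self.entities.setdefault(entity, [])
        if fact not in facts:
            facts.append(fact)

    def relate(self, source: str, relation: str, target: str) -> None:
        """Record a typed edge between two entities, skipping duplicates."""
        edge = {"from": source, "type": relation, "to": target}
        if edge not in self.relations:
            self.relations.append(edge)

    def recall(self, entity: str) -> dict:
        """Everything known about one entity: its facts and outgoing relations."""
        return {
            "facts": list(self.entities.get(entity, [])),
            "relations": [r for r in self.relations if r["from"] == entity],
        }

    def save(self) -> None:
        """Persist to disk so a later session can reload the same memory."""
        data = {"entities": self.entities, "relations": self.relations}
        self.path.write_text(json.dumps(data, indent=2))
```

The design choice worth noticing is that facts hang off named entities, so a later session can pull back just what it needs (say, everything about one project) instead of re-reading a whole transcript.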

What’s next?

MCP is quickly becoming the de facto standard for connecting language models to real tools.

If you’re building AI assistants or internal tooling, MCP makes your life easier. Full stop.

Next time: I’ll show how I wired Claude to a local MCP server so I can manage files, write code, and even edit my upcoming Substack posts in a consistent tone and style, without re-explaining myself in every new chat.

Found any cool use cases for MCP servers? Drop them in the comments below! I’d love to see what you’re building.