Build Better AI Prompts: The 6 Blocks Every Engineer Should Know

Simple blocks, repeatable structure, and feedback from the AI peanut gallery.

Watch enough 80s action movies and you start to see the blueprint. A brooding ex-mercenary with too many muscles and not enough therapy tries to walk away from a fight, until an unbreakable personal commitment pulls him back in. Sure, the details change from movie to movie, but the formula becomes impossible to unsee once you notice it.

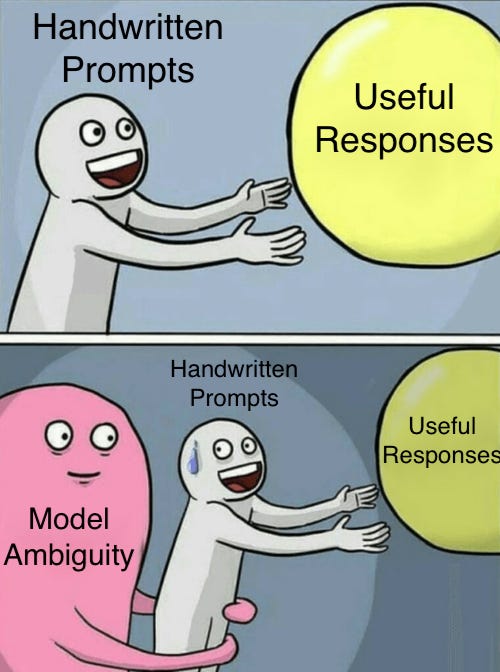

Prompting AI models works much the same way. Whether you realize it or not, you’re probably following a familiar structure: setting context, providing background, and asking a clear question. I recently came across Anthropic’s guide to writing strong prompts. It’s designed for Claude, but the patterns it outlines show up across every major model. They reflect a shared internal grammar that most LLMs seem to recognize.

In this post, we’ll break that structure down into a reusable prompt template you can apply across models and tasks. To see how well it holds up, I asked the Big Four—Claude, GPT, Gemini, and Grok—for feedback on what they would change. The core framework passed the test, but each model had strong opinions about what really matters.

The 6 Core Blocks of a Strong Prompt

All structures are built on a solid foundation, whether that's a blanket fort or the Taj Mahal. Each piece builds on the one before it, and leaving one out can bring the whole thing down in unexpected ways. The order of these pieces isn't critical for every prompt, but following this sequence helps the model clearly understand what we're asking it to do. As always, you'll need to test with your specific use case to see what works best.

Here are the six building blocks, applied to a personal shopping prompt.

1. Broad Role / Persona / Tone / Task

Every prompt starts with setting the scene. You’re telling the model what kind of role it’s stepping into, who it’s speaking to, and what kind of outcome you’re aiming for. Without this framing, responses can drift in tone or miss the point entirely. You don’t need a full character backstory, just enough clarity to lock in the right context.

You are a personal shopping assistant who specializes in answering users’ questions based on previous customer reviews of their purchase.

2. Input Data

Once the model knows what role it’s playing, it needs something to work with. This is your source material—the raw context the model will draw from when answering. The more input you include, the earlier in the prompt it should appear. That way the model processes the data first instead of jumping straight to the question and backtracking.

Here is a selection of customer reviews for the chosen product:

<product_reviews>

- ⭐⭐⭐⭐ “Great sound quality and battery life. Case feels a little cheap though.”

- ⭐⭐⭐ “Fit is okay, but they fall out when I run.”

- ⭐⭐⭐⭐⭐ “Excellent for the price. Noise cancellation works surprisingly well.”

- ⭐⭐ “Connection drops often, especially when paired with a laptop.”

- ⭐⭐⭐⭐ “Solid fit and amazing sound, but case is bulky.”

- ⭐⭐⭐⭐⭐ “Love these. Super reliable and good mic quality for calls.”

</product_reviews>

Note the (optional) use of XML tags to explicitly mark the sections of content we want the model to pay attention to. This is especially helpful when reusing template prompts where the input data isn’t known ahead of time.
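When the reviews change with every request, it can help to generate the tagged block in code instead of pasting it by hand. Here is a minimal Python sketch; the `format_reviews` helper and the `(stars, text)` record shape are hypothetical, chosen only to mirror the example data above.

```python
from typing import List, Tuple

# Hypothetical review record: (star rating 1-5, review text).
Review = Tuple[int, str]


def format_reviews(reviews: List[Review]) -> str:
    """Wrap reviews in <product_reviews> tags, one bullet per review."""
    lines = ["<product_reviews>"]
    for stars, text in reviews:
        # Render the rating as repeated stars, mirroring the example data.
        lines.append(f"- {'⭐' * stars} “{text}”")
    lines.append("</product_reviews>")
    return "\n".join(lines)


reviews = [
    (4, "Great sound quality and battery life. Case feels a little cheap though."),
    (2, "Connection drops often, especially when paired with a laptop."),
]
print(format_reviews(reviews))
```

Because the tags stay fixed while the reviews change, the same template works for any product. If reviews come from untrusted users, you may also want to remove any literal `</product_reviews>` text from them so a review can't close the tag early.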

3. Examples of Correct Responses

Examples help calibrate the response, especially when you’re dealing with nuance. Without them, you’re leaving it up to the model to guess what you mean by words like “helpful,” “brief,” or “clear.”

Even one or two well-chosen examples can anchor the task.

<example>

<question>

Are these headphones good for running?

</question>

<answer>

**Short Answer**: Not ideal for running.

**Why**: One reviewer said they fall out while running, and no other reviews mention good fit during exercise.

**Quote**: “Fit is okay, but they fall out when I run.”

</answer>

</example>

4. Detailed Task or Question

Now that the model knows who it is, what data to use, and what a good answer looks like, it’s time to get specific. This is where you ask the actual question or define the task. As with any question, the more clearly you explain yourself, the better the results. Vague prompts produce vague answers.

Write a clear, concise answer to this question:

<question>

Are these headphones good for travel?

</question>

5. Output Formatting Instructions

Don’t leave formatting up to chance. If you want the output in a specific structure, such as XML tags or bullet points, spell it out. This keeps the response consistent, scannable, and easy to parse, especially if you’re feeding it into another system for further processing. It’s also a good place to define what the model should do if it’s unsure. Pro tip: double-check that this format matches the examples you used earlier!
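A fixed format is what makes the response machine-readable. As an illustration, here is a minimal Python sketch of a parser for the `**Short Answer**` / `**Why**` / `**Quote**` layout used in the example answer. The `parse_answer` name is hypothetical, and it assumes the model returns exactly one `<answer>` block and, when unsure, the exact fallback sentence defined in this block.

```python
import re
from typing import Dict, Optional

# Assumed to match the fallback sentence in the prompt word for word.
FALLBACK = "Sorry, I do not have sufficient information at hand to answer this question."
LABELS = ("Short Answer", "Why", "Quote")


def parse_answer(response: str) -> Optional[Dict[str, str]]:
    """Extract the labeled fields from the first <answer> block.

    Returns None if the model used the fallback sentence or no <answer> block exists.
    """
    if FALLBACK in response:
        return None
    block = re.search(r"<answer>(.*?)</answer>", response, re.DOTALL)
    if block is None:
        return None
    fields: Dict[str, str] = {}
    for label in LABELS:
        # Capture everything after "**Label**:" up to the end of that line.
        m = re.search(rf"\*\*{re.escape(label)}\*\*:[ \t]*(.+)", block.group(1))
        if m:
            fields[label] = m.group(1).strip()
    return fields
```

Returning `None` for the fallback case lets calling code treat "the model declined" separately from "the model answered", which is one reason it pays to define that behavior in the prompt.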

Respond using only this format:

<answer>

**Short Answer**: [concise answer]

**Why**: [1–2 sentence explanation]

**Quote**: [Relevant quotes]

</answer>

If there is not sufficient information in the product reviews to produce such an answer, you may demur and write "Sorry, I do not have sufficient information at hand to answer this question."

6. Precognition or Thinking Instructions

This block is optional, but powerful. Use it when you want the model to follow a specific reasoning path before answering, such as extracting quotes from the input data, weighing pros and cons, or scanning for patterns. Think of it as prompting the model to “think out loud” before giving you its final answer.

It’s especially useful when accuracy matters or when you’re working with dense input data. If you only need the answer to have the right vibe, you likely don’t need it. But if you want grounded reasoning, sourced output, or better consistency across responses, this step makes a big difference.
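If the model writes its extracted reviews inside `<relevant_reviews>` tags before answering, you will usually want to separate that scratch work from the final answer, for example to log the evidence and show users only the answer. A minimal Python sketch, assuming a single `<relevant_reviews>` block in the response; `split_thinking` is a hypothetical helper name.

```python
import re
from typing import List, Tuple


def split_thinking(response: str) -> Tuple[List[str], str]:
    """Separate the <relevant_reviews> scratch work from the final answer.

    Returns (non-empty lines found inside <relevant_reviews>, remaining text).
    """
    match = re.search(r"<relevant_reviews>(.*?)</relevant_reviews>", response, re.DOTALL)
    if match is None:
        # The model skipped the thinking step; treat everything as the answer.
        return [], response.strip()
    evidence = [line.strip() for line in match.group(1).splitlines() if line.strip()]
    # Cut the tagged block out and keep whatever surrounds it.
    answer = (response[: match.start()] + response[match.end():]).strip()
    return evidence, answer
```

Keeping the extracted evidence also makes it easy to spot-check whether the final answer is actually grounded in the quotes the model pulled out.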

Before you answer, pull out the most relevant reviews from the data into <relevant_reviews> tags. Then answer the question.

Putting It All Together

Here’s that same prompt put together neatly so that you can give it a quick copy+paste in your next prompting session.

You are a personal shopping assistant who specializes in answering users’ questions based on previous customer reviews of their purchase.

Here is a selection of customer reviews for the chosen product:

<product_reviews>

- ⭐⭐⭐⭐ “Great sound quality and battery life. Case feels a little cheap though.”

- ⭐⭐⭐ “Fit is okay, but they fall out when I run.”

- ⭐⭐⭐⭐⭐ “Excellent for the price. Noise cancellation works surprisingly well.”

- ⭐⭐ “Connection drops often, especially when paired with a laptop.”

- ⭐⭐⭐⭐ “Solid fit and amazing sound, but case is bulky.”

- ⭐⭐⭐⭐⭐ “Love these. Super reliable and good mic quality for calls.”

</product_reviews>

<example>

<question>

Are these headphones good for running?

</question>

<answer>

**Short Answer**: Not ideal for running.

**Why**: One reviewer said they fall out while running, and no other reviews mention good fit during exercise.

**Quote**: “Fit is okay, but they fall out when I run.”

</answer>

</example>

Write a clear, concise answer to this question:

<question>

Are these headphones good for travel?

</question>

Respond using only this format:

<answer>

**Short Answer**: [concise answer]

**Why**: [1–2 sentence explanation]

**Quote**: [Relevant quotes]

</answer>

If there is not sufficient information in the product reviews to produce such an answer, you may demur and write "Sorry, I do not have sufficient information at hand to answer this question."

Before you answer, pull out the most relevant reviews from the data into <relevant_reviews> tags. Then answer the question.

What the Models Said

I asked Claude, GPT-4o, Gemini, and Grok to review this intentionally minimal prompt and offer feedback on what worked and what could be improved. Unsurprisingly, all of them agreed it was a solid starting point. But interestingly, each one had a different idea of what could make it stronger. To make sense of those differences, I assigned each model an archetype that reflects how it seemed to approach the problem.

Across the board, the models praised the prompt’s structure and clarity. Most emphasized the need for more explicit instructions to interpret and synthesize the input, rather than just rephrasing it. That lines up with what these models do best: processing large amounts of context and delivering grounded, helpful answers.

What surprised me was that each of the models had a different focus area for improvements. Some responded with direct, tactical edits I could immediately use. Others offered broader conceptual suggestions. A few focused on ambiguity and edge cases, proposing ways to handle incomplete or unclear input more gracefully.

Four AI Coworkers Walk Into a Prompt…

Each model brought a distinct personality to the table. It felt less like testing software and more like running a design review with four very opinionated coworkers. One sees prompts as architecture. Another flags ambiguous requirements. One rewrites your design for clarity while you’re still explaining it. The last one challenges your core assumptions just to see if they still hold up.

And yet, despite their differences, they all agreed on one thing: strong prompts aren’t accidents. They’re built.

This exercise showed that well-structured prompts—like well-structured code—are modular, readable, and reusable. These six building blocks won’t guarantee the perfect response every time, but they give you a blueprint that works across tools, use cases, and model temperaments.
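To make that modularity concrete, here is a minimal Python sketch that assembles the blocks in the order used in this post. The `build_prompt` and `format_example` names are hypothetical; each block is just a string, so you can swap one out and test it without touching the others.

```python
from typing import List, Optional


def format_example(question: str, answer: str) -> str:
    """Wrap one question/answer pair in <example> tags (block 3)."""
    return (
        f"<example>\n<question>\n{question}\n</question>\n"
        f"<answer>\n{answer}\n</answer>\n</example>"
    )


def build_prompt(
    role: str,                        # 1. role / persona / tone / task
    input_data: str,                  # 2. input data, placed early
    examples: List[str],              # 3. formatted examples
    task: str,                        # 4. detailed task or question
    output_format: str,               # 5. output formatting instructions
    thinking: Optional[str] = None,   # 6. optional precognition step
) -> str:
    """Join the blocks in order, separated by blank lines; empty blocks are skipped."""
    blocks = [role, input_data, *examples, task, output_format]
    if thinking:
        blocks.append(thinking)
    return "\n\n".join(b.strip() for b in blocks if b.strip())
```

Because every block is a separate argument, you can A/B test a single block (say, a different output format) while holding the rest constant.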

In the next posts, we’ll dig deeper into each model’s feedback and explore what it reveals about their internal logic and design assumptions. Think of it as personality typing for LLMs: practical, a little nerdy, but surprisingly useful.

Until then, try the prompt. Tweak the blocks. Break them. Rebuild them. That’s how you go from guessing to engineering.