Boxes, Arrows, and Ambiguity

Inside the quiet panic of system design interviews, and how to make sense of it.

You’ve redrawn the same shaky boxes four times. Each arrow gets an even more precise label. Anything to fill the silence.

On the other side of the Zoom call, your interviewer hasn’t moved his mustache in twenty minutes. The only thing he’s done is paste four lines of text describing the system you’re supposed to design.

You keep glancing for a cue, a nod, a raised eyebrow, a facial twitch of approval, but there’s nothing.

So you start talking faster. Buzzwords fire off like flare guns: Redis cache, load balancer, randomly distribute your keyspace by… The silence swallows each thought whole and somehow grows larger.

What do you focus on next?

I used to fight the silence by erasing and redrawing boxes, as if that small act of control could make up for all the verbal flailing. System design interviews are open ended by nature. There is no clear goal or endpoint. You might talk about database sharding, session load balancing, or Redis partitioning. Then the meta questions begin. Why Redis? Why a read cache at all? Why even a read endpoint? Do customers really need this data that badly?

These days, I have a better sense of what these interviews look for, because it is the same skill you use every day as an engineer: moving forward when things are unclear.

How do you know you’re on the right track when the world refuses to tell you?

Every system design interview begins in that vacuum. There is no feedback, no validation, and no guardrails. Somehow, you still have to make progress.

System Design is just Engineering

It turns out I like system design interviews a lot more than LeetCode, now that I’ve figured out a couple of things.

Real work is ambiguous:

Here’s a question I got all the time at my last job: ‘Could we scale that Kafka topic 10x tomorrow?’

It is an ambiguous question, one I had no idea how to answer at first. But if I step back, I can list all the things that should make me nervous.

Is this 10x my peak volume, or 10x my steady state?

Is this a one-time data load, or the new baseline?

Is the data evenly distributed, or could there be hot spots?

Does quick math show I have capacity in my fleet to scale this hard?

In the end, those answers decide whether the system holds or collapses. It takes experience to know what should worry you, and even more to know how to fix it. But most of all, it takes curiosity and the instinct to stop and ask the right questions.
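To make the “quick math” concrete, here is a rough sketch of how those four questions could turn into a back-of-the-envelope check. Everything in it is an assumption for illustration: the `TopicLoad` fields, the traffic numbers, and the per-partition limit are made up, and a real answer would also have to account for brokers, consumers, disk, and network.

```python
from dataclasses import dataclass


@dataclass
class TopicLoad:
    """Hypothetical snapshot of one topic's traffic; all numbers are invented."""
    steady_mb_per_s: float    # average ingest rate
    peak_mb_per_s: float      # highest observed ingest rate
    partition_share: list     # fraction of traffic landing on each partition


def can_absorb(load: TopicLoad, multiplier: float,
               per_partition_limit_mb_per_s: float, of_peak: bool = True) -> bool:
    """Return True if the hottest partition stays under its limit after scaling."""
    base = load.peak_mb_per_s if of_peak else load.steady_mb_per_s
    target = base * multiplier
    # The hottest partition is what falls over first, so size for it, not the average.
    hottest = max(load.partition_share) * target
    return hottest <= per_partition_limit_mb_per_s


even = TopicLoad(steady_mb_per_s=20, peak_mb_per_s=50, partition_share=[0.25] * 4)
skewed = TopicLoad(steady_mb_per_s=20, peak_mb_per_s=50,
                   partition_share=[0.7, 0.1, 0.1, 0.1])

# Assumed limit of 100 MB/s per partition; not a Kafka constant.
print(can_absorb(even, 10, 100))                   # 10x peak, evenly spread keys
print(can_absorb(even, 10, 100, of_peak=False))    # 10x steady state, evenly spread keys
print(can_absorb(skewed, 10, 100, of_peak=False))  # 10x steady state, one hot partition
```

With these made-up numbers, the same “10x” request passes or fails depending on whether it means peak or steady state, and on whether one key is hot. That is the whole point of asking before answering.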

Feedback is delayed:

How do you know your last project was going to work before it launched? You wrote the requirements, shipped the code, deployed the artifact. Were you confident it would hold up? Why?

Software is one of the cruelest worlds to work in. The freedom is boundless, but boundless freedom is easy to get lost in. Every choice is a tradeoff, some subtle and some sneaky, and you only learn to spot them through experience. It is hard to tell the difference between “made the right call” and “made something that worked for now.”

Code is easy, right? Write some tests, get the green check, move on. But software is bigger than a green checkmark. It is your job to build the feedback loop that tells you whether the whole thing works, not just the parts.

The silence in a system design interview is not a trap. It is a mirror. It reflects what engineering often feels like: no dashboards, no post-mortems, no logs. Just uncertainty. The best engineers learn to build their own feedback loops and trust them.

Build your feedback loop:

A feedback loop is how you stay confident you’re building the right thing. It should be flexible enough to adapt, but rigid enough to keep you moving.

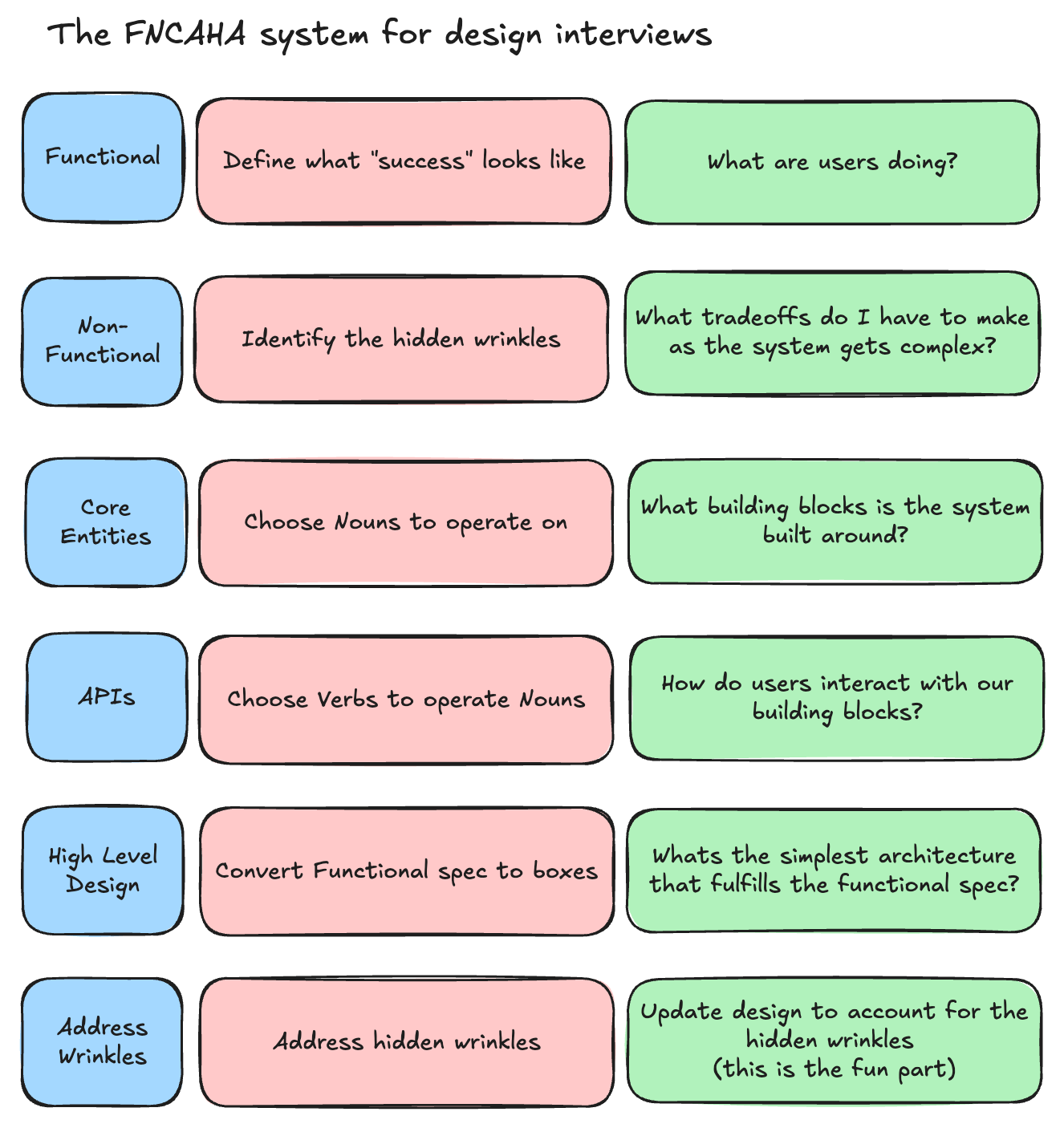

One of my favorite feedback loops for system design problems is the FNCAHA framework. I’m sure the folks over at HelloInterview, who I borrowed (cough: stole) this from, have a catchier name for it. I use it in every interview because it gives me six trail markers that guide me toward a working design. They don’t give you the answer, but they help you find the right questions to ask. Even dim trail markers keep you calm and moving forward.

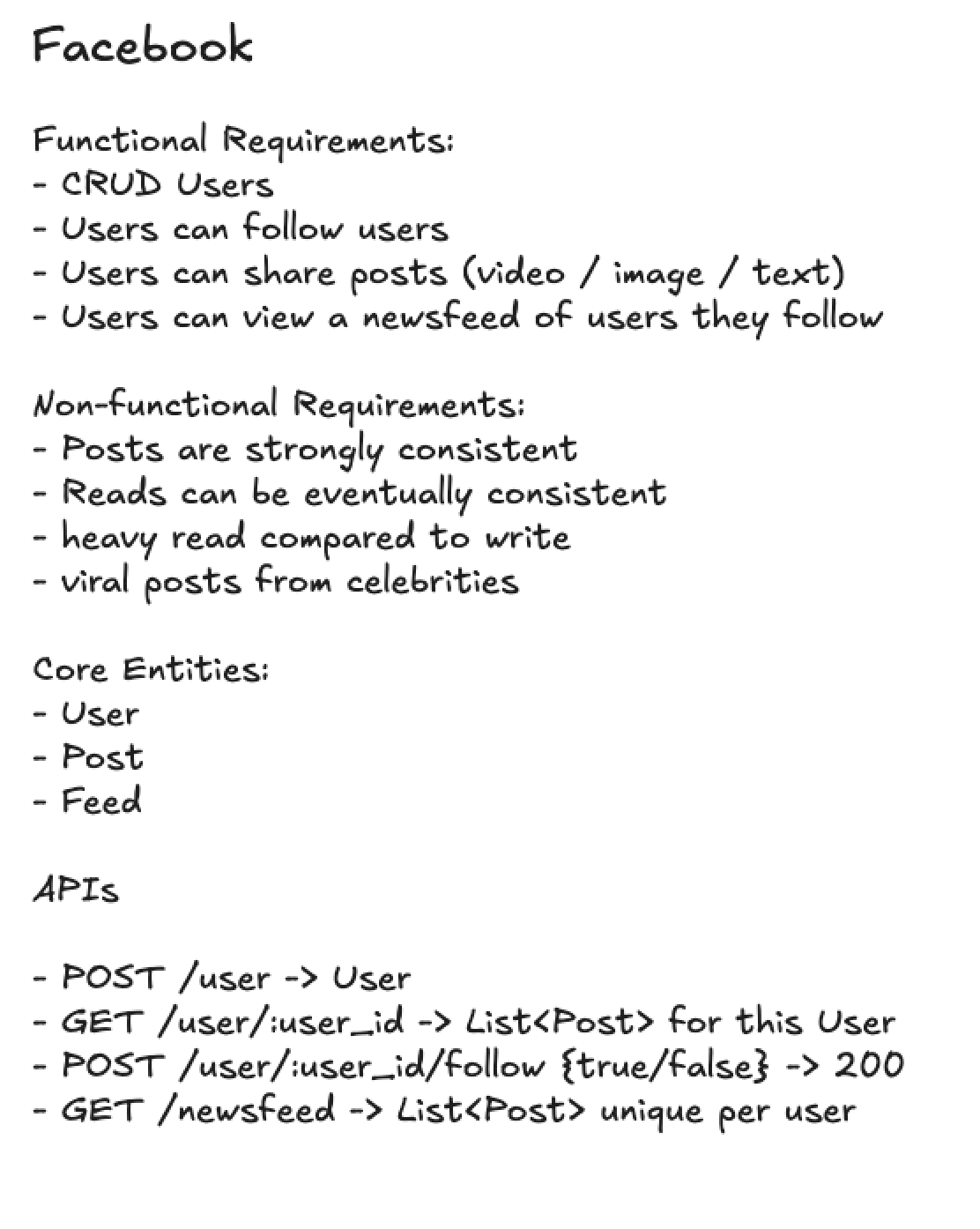

Here’s a design I built for a news feed service like Facebook’s. The first section shows the first four parts of the FNCAHA framework. Each part builds on the one before it. Together, they form your contract with the interviewer. If it’s on this list, it goes into the system. If it isn’t, or if it’s explicitly marked “below the line,” we leave it out. No boxes, no data specs, no wasted time.

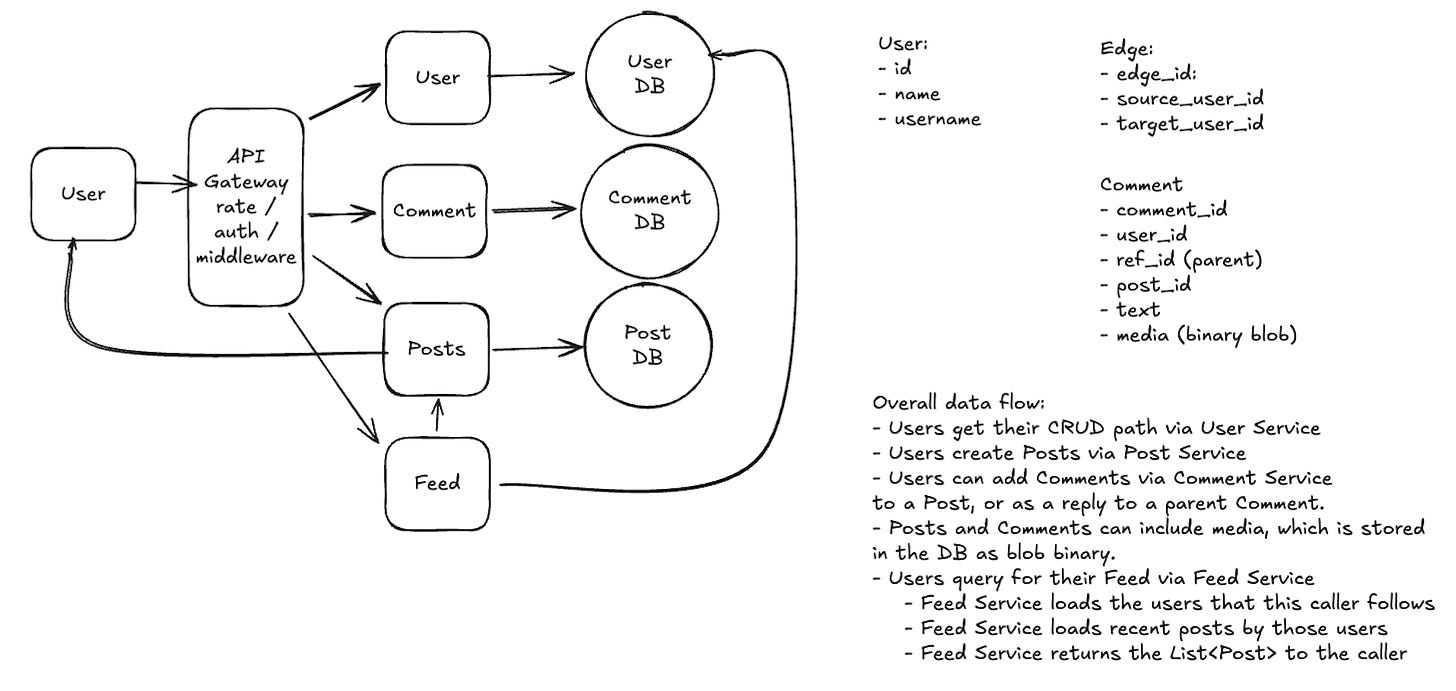

See how the functional requirements and APIs translate directly into the services and systems I built. One for one. Like magic. The design works because it matches exactly what we agreed to build earlier.

Now let’s revisit our non-functional requirements and look for a few wrinkles to smooth out.

A note about Wrinkles

A wrinkle is the thing that makes a problem hard or interesting.

Imagine you run a small online forum for a niche community that suddenly goes viral. Unfortunately, the attention has also brought a lot of spam and invalid posts. Do you:

allow anyone to post anything they like, and remove bad content later, or

put every new post into a moderator queue and manually approve it before publishing?

You can argue either way, but you need to choose and explain why.
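If it helps to see the two options side by side, here is a minimal sketch. The class and method names are hypothetical, and there is no storage, auth, or spam detection here. It only shows where a post sits under each policy.

```python
from collections import deque


class PublishThenRemove:
    """Option 1: posts go live immediately; moderators remove bad ones later."""

    def __init__(self):
        self.live = []

    def submit(self, post: str) -> None:
        self.live.append(post)       # visible to everyone right away

    def remove(self, post: str) -> None:
        self.live.remove(post)       # cleanup happens after the fact


class ApproveThenPublish:
    """Option 2: posts wait in a queue until a moderator reviews them."""

    def __init__(self):
        self.pending = deque()
        self.live = []

    def submit(self, post: str) -> None:
        self.pending.append(post)    # nothing is visible yet

    def review_next(self, approve: bool) -> None:
        post = self.pending.popleft()
        if approve:
            self.live.append(post)   # rejected posts are simply dropped


open_forum = PublishThenRemove()
open_forum.submit("great thread")
open_forum.submit("buy cheap watches")
print(open_forum.live)               # spam is visible until someone removes it

gated_forum = ApproveThenPublish()
gated_forum.submit("great thread")
print(gated_forum.live, len(gated_forum.pending))  # nothing live until a moderator acts
```

The first policy optimizes for posting latency and accepts that readers will see some spam. The second protects readers and makes moderator throughput the bottleneck. Either can be the right answer, which is why you have to say which one you are picking and why.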

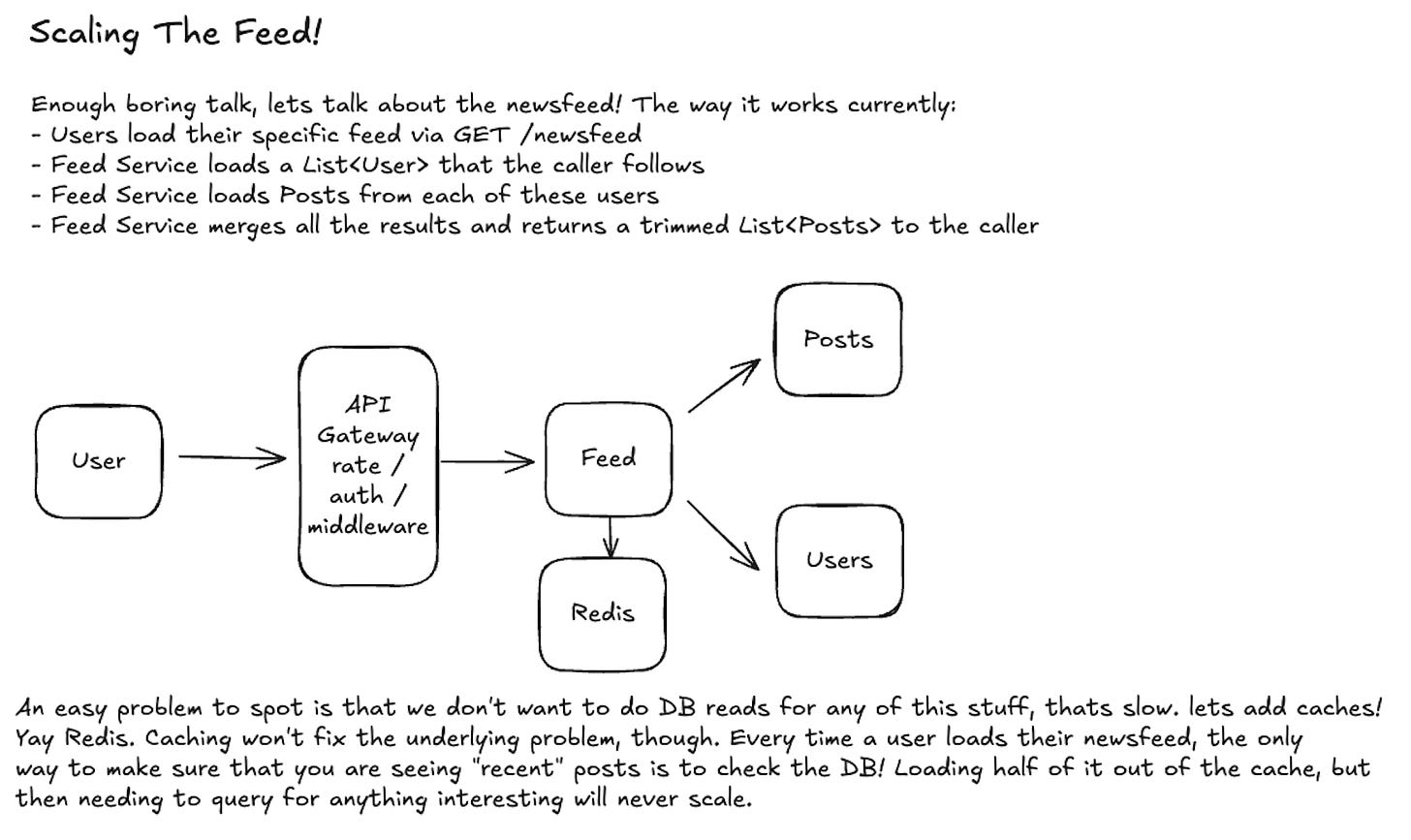

For the Facebook design above, one of the non-functional requirements was that the system would take heavy read traffic compared to write traffic. The design as it stands cannot handle that load. Let’s look at why.

Oops. My caching strategy doesn’t actually work the way I thought. Time to rethink it. (Spoiler: the fix is pre-computation.)

This caching issue wasn’t something the interviewer planned to discuss, but it’s a real-world problem that would surface as the system scales. The only reason we caught it was that our guideposts pointed us back to the trail. Are we still solving the problem we set out to solve?

That is the real signal. Not perfect boxes, but a process that keeps thinking inside the silence.

Speed up your feedback loop

Like most things I talk about these days, the answer is simple: use more AI. Take a screenshot of your design, drop it into ChatGPT, and ask for feedback at the level you’re aiming for.

Mid-level engineers can get critiques on service boundaries or database choices.

Seniors can stress-test scaling assumptions or schema tradeoffs.

Staff-level folks can get pressed on business metrics, IAM policies, and all the gritty details that usually show up in production reviews.

It’s like having a virtual staff engineer who’s patient, fast, and endlessly curious.

Here are two ways I’ve found it most useful.

Get feedback on your design

Explain why you made a choice and what constraints you were working under. The model will surface missed requirements, highlight weak assumptions, and suggest alternatives you might not have considered. It is not just “Is this right?” or “Would this work?” It is a way to pressure test your design across multiple scenarios before the interviewer ever asks.

After showing ChatGPT an early version of my high-level design, here is the top-line response it gave me:

At small scale, this pull-based feed design is simple and correct, but I’d evolve it to a fan-out-on-write model backed by a cache and async queue to handle high read volume and celebrity posts efficiently.

That insight came directly from thinking through the non-functional requirements and how they would impact the system.
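For anyone who hasn’t seen the two models next to each other, here is a toy, in-memory sketch of the difference. It is not my actual design: the class names are made up, and the cache and async queue from the suggestion are left out entirely. It only shows where the work lands.

```python
from collections import defaultdict


class PullFeed:
    """Fan-out on read: store posts per author, assemble the feed at read time."""

    def __init__(self):
        self.posts_by_author = defaultdict(list)  # author -> [(timestamp, text)]
        self.following = defaultdict(set)         # reader -> authors they follow

    def follow(self, reader, author):
        self.following[reader].add(author)

    def post(self, author, timestamp, text):
        self.posts_by_author[author].append((timestamp, text))  # one cheap write

    def read(self, reader, limit=10):
        # Every read touches every followed author: expensive when reads dominate.
        merged = [p for a in self.following[reader] for p in self.posts_by_author[a]]
        return [text for _, text in sorted(merged, reverse=True)[:limit]]


class PushFeed:
    """Fan-out on write: copy each post into every follower's precomputed feed."""

    def __init__(self):
        self.followers = defaultdict(set)         # author -> readers following them
        self.feed = defaultdict(list)             # reader -> [(timestamp, text)]

    def follow(self, reader, author):
        self.followers[author].add(reader)        # no backfill of older posts here

    def post(self, author, timestamp, text):
        # Write cost grows with follower count: this is the celebrity problem.
        for reader in self.followers[author]:
            self.feed[reader].append((timestamp, text))

    def read(self, reader, limit=10):
        # The read only looks at one precomputed list.
        return [text for _, text in sorted(self.feed[reader], reverse=True)[:limit]]


for feed in (PullFeed(), PushFeed()):
    feed.follow("ann", "bob")
    feed.follow("ann", "cy")
    feed.post("bob", 1, "b1")
    feed.post("cy", 2, "c1")
    feed.post("bob", 3, "b2")
    print(type(feed).__name__, feed.read("ann"))
```

Both versions return the same feed for the same inputs. What changes is who pays: the pull model pays on every read, while the push model pays once per follower on every write. That is why it suits a read-heavy system and why celebrity accounts need special handling.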

Explore tradeoffs in real time

Ever wonder why you chose MySQL instead of DynamoDB? Not just “because it is relational,” but the real use cases where one fits better than the other. Or when Redis might actually make more sense.

Before, the only way to get good answers was to sift through blog posts and half-relevant docs until you stumbled on a decent comparison. Now you can just ask the model.

“What kind of data would make DynamoDB a better fit?”

“How does geo-hashing actually work under the hood?”

“What would have to change for my choice to also change?”

Faster loops

You get clear, contextual explanations at exactly the level you need. For me, that has been transformative. I am no longer guessing which keywords to Google or waiting for a senior engineer to have time. The tight cycle between question, feedback, and insight scales the practice far beyond a single interview.

There aren’t many silences in my interviews anymore. Partly because I’ve practiced sounding human again: talking about the weather, pretending latency is just normal small talk. But mostly because the FNCAHA framework gives me something to hold onto. Clear guideposts that help me move forward when no one is telling me what’s right.

That same structure makes AI tools even more powerful. I can drop a design into ChatGPT, ask for feedback at the level I care about, and get reflection in seconds instead of waiting days for a review. It is all about shrinking the distance between an idea and understanding.

The best engineers I have worked with share the same instinct: shorten the loop, ask questions sooner, surface tradeoffs earlier, and learn faster.

AI just makes that instinct visible. It is a mirror that rewards curiosity instead of perfection.